UPDATE from Dec 22, 2016: Since the original publication of this article there have been some significant market updates that need to be considered. Google bought Api.ai and released its own home-grown Cloud Natural Language API, Amazon introduced Amazon Lex, a conversational API, and Wit.ai is updating its Stories and making them even better.

Recent announcements of a bot framework for Skype from Microsoft and a Messaging Platform for Messenger from Facebook have turned chat into a new platform. More and more developers are coming up with the idea of making their own bot for Slack, Telegram, Skype, Kik, Messenger and, probably, several other platforms that might pop up over the next couple of months.

Thus, we have a rising interest in the under-explored potential of making smart bots with AI capabilities and conversational human-computer interaction as the main paradigm.

In order to build a good conversational interface we need to look beyond a simple search by a substring or regular expressions that we usually use while dealing with strings.

The task of understanding spoken language and free text conversation in plain English is not as straightforward as it might seem at first glance.

Below we look at a possible dialogue structure and explain the concepts behind advanced natural language processing tools. We also focus on the platforms that we can use for our bots today, including these APIs: LUIS from Microsoft, Wit.ai from Facebook, Api.ai from the team behind the Assistant app (now part of Google), Watson from IBM, and Alexa Skills Kit and Lex from Amazon.

Ready to build a conversational bot for your business, but confused with the variety of platforms? Let’s talk!

A Dialogue Example

Let’s look at the ways we can ask a system to find ‘asian food near me.’ The variety of search phrases and utterances could look similar to this:

- Asian food near me please

- Food delivery place not far from here

- Thai restaurants in my neighborhood

- Indian restaurant nearby

- Sushi express places please

- Places with asian cuisine

- Etc.

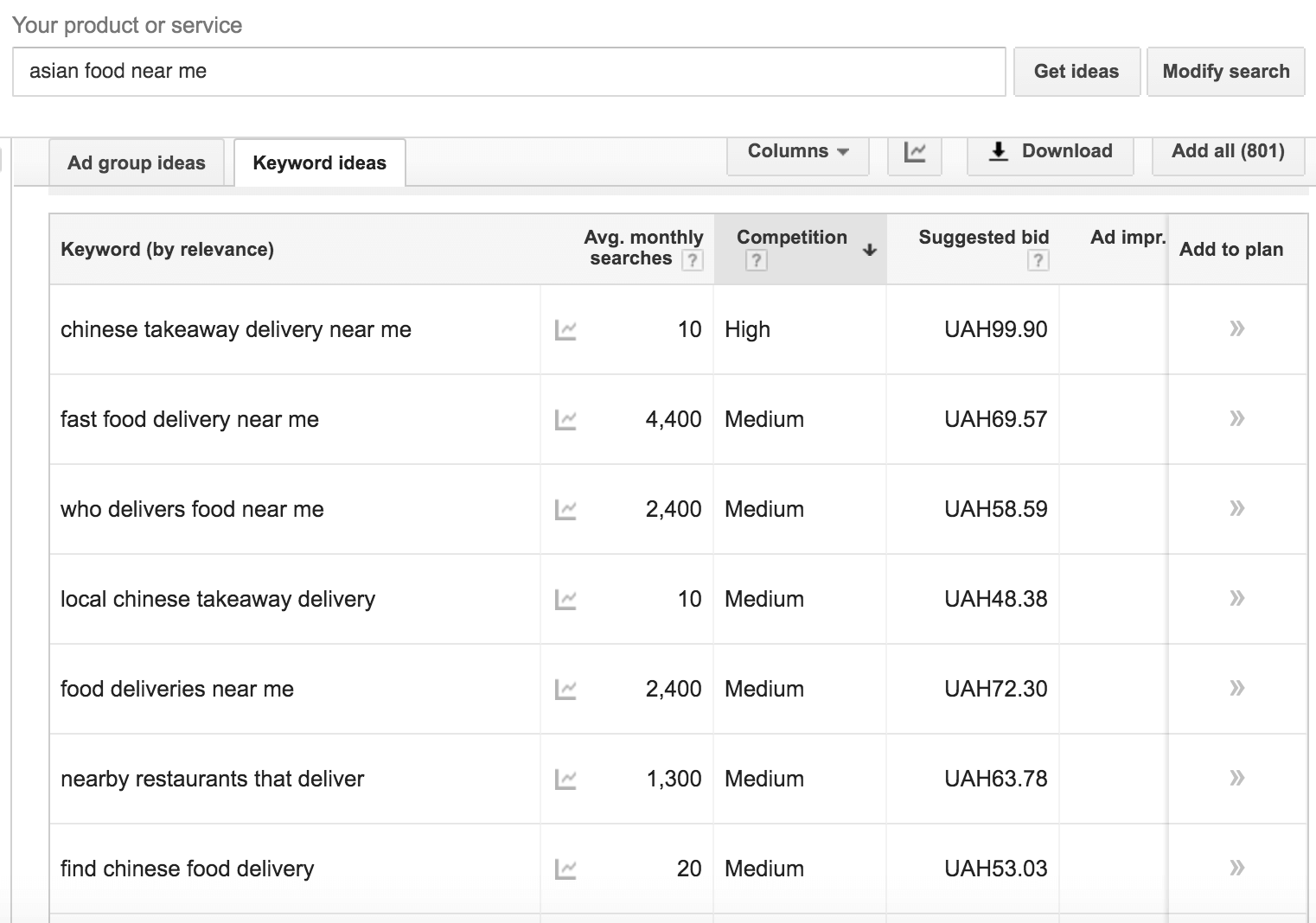

But if we are curious enough, we can also ask Google Keyword Planner for other related ideas and extend our list by about 800 phrases related to the search term “asian food near me”. We use Keyword Planner for such tasks here because it is a great source of aggregated searches that users regularly perform in Google.

Of course, not all of this is directly related to the original search intent, asian food near me. Let’s say, however, that we want to create a curated list of Asian Food places; in this case we can see that the results are still highly relevant to the service that we want to provide to the users.

Therefore, we can try to steer the conversation towards the desired ‘asian food’ topic with the help of questions and suggestions from the bot.

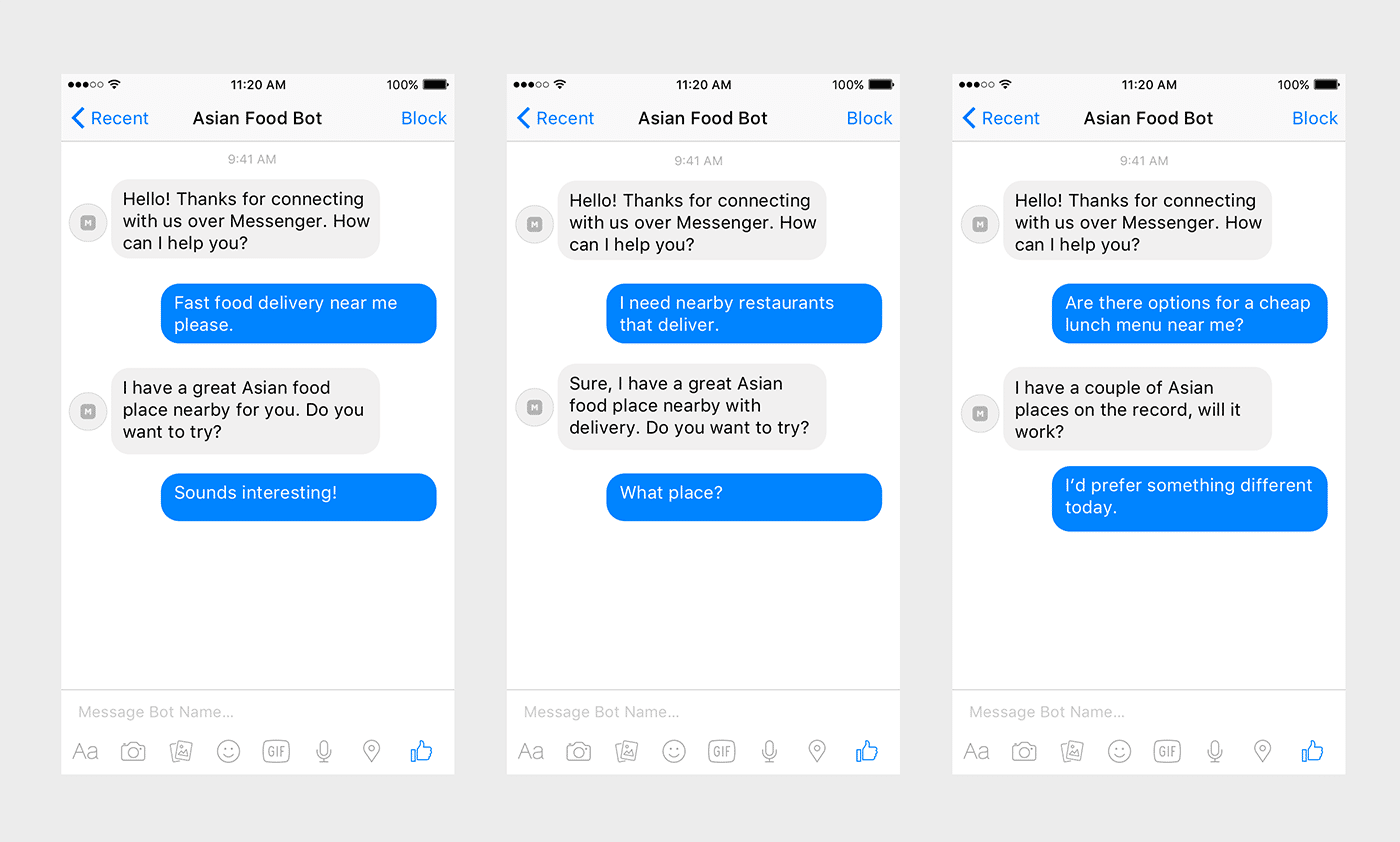

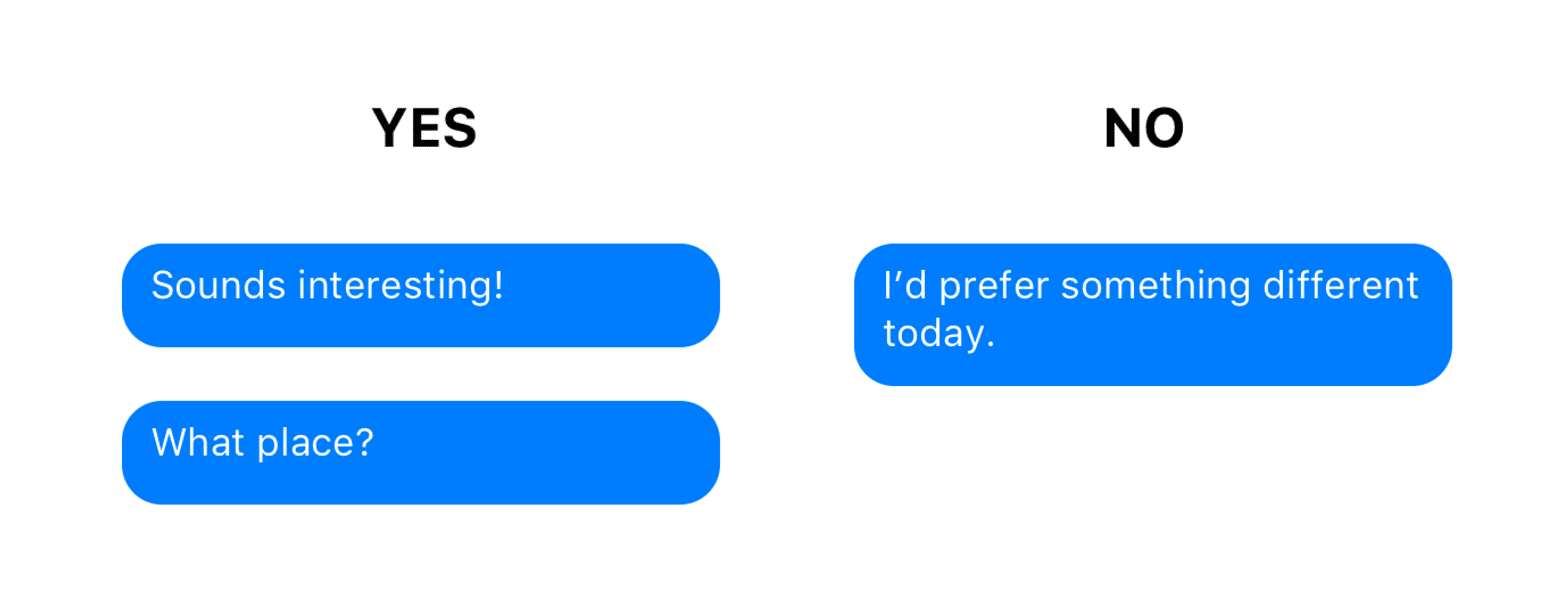

Consider the next dialogue examples and suggestions of ways to direct the conversation:

From the example above we see how a broad variety of utterances can be employed by the user for the purpose of finding food.

Also notice how users can say Yes and No during the dialogue to confirm or decline the suggested option.

It is clear that chatbots need some way of understanding language and conversational phrases that is more sophisticated than a simple text search by phrase or even regular expressions.
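To make the limitation concrete, here is a minimal sketch that runs a hand-written regular expression over the utterances listed earlier. The pattern is our own illustration of a naive approach, not something taken from any of the platforms discussed below.

```python
import re

# The example utterances from the list above.
UTTERANCES = [
    "Asian food near me please",
    "Food delivery place not far from here",
    "Thai restaurants in my neighborhood",
    "Indian restaurant nearby",
    "Sushi express places please",
    "Places with asian cuisine",
]

# A hand-written pattern that looks reasonable at first glance (illustrative only).
NAIVE_PATTERN = re.compile(r"asian food|asian cuisine", re.IGNORECASE)


def naive_match(utterance: str) -> bool:
    """Return True if the utterance contains one of the hard-coded phrases."""
    return NAIVE_PATTERN.search(utterance) is not None


matched = [u for u in UTTERANCES if naive_match(u)]
missed = [u for u in UTTERANCES if not naive_match(u)]

print("matched:", matched)
print("missed:", missed)
```

Only the two phrases that literally contain the hard-coded words are matched. The other four express the same need in different words and are missed, and extending the pattern by hand for every new phrasing does not scale.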

Dialogue Structure as NLP engineers see it

From the example above we can see that each expression from the users has the intent to take some action.

An Intent is the core concept in building the conversational UI in chat systems, so the first thing that we can do with the incoming message from the user is to understand its Intent. This means mapping a phrase to a specific action that we can really provide.

Along with the Intent, it’s necessary to extract the parameters of actions from the phrase. In the previous example with ‘asian food’, the words ‘nearby’ or ‘near me’ correspond to the current location of the user.

Parameters, also called entities, often belong to a particular type. Examples of entity types that are commonly supported in language understanding systems are:

- Location

- Datetime

- Number

- Enumeration (predefined list of named things)

- Contact

- Distance

- Duration

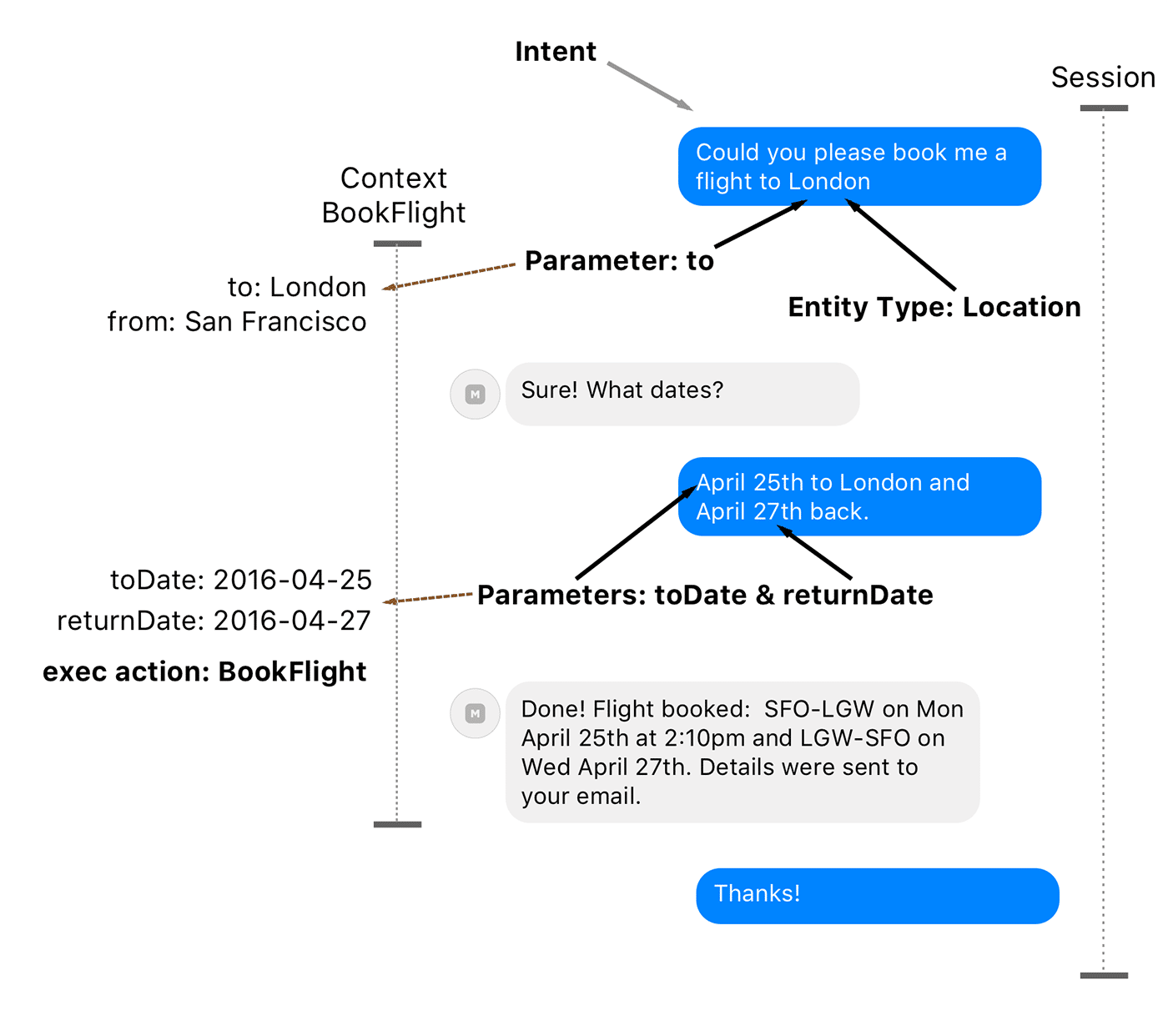

Here are the basic representations of the Intent, Entities and Parameters, as well as Sessions and Contexts which we will discuss later.
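As a rough sketch, the result of understanding one utterance can be modelled with two small data types. The class names, field names and the `FindFood` and `Cuisine` labels are hypothetical choices of ours for illustration; each platform described below has its own response format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Entity:
    type: str   # entity type, e.g. "Location", "Datetime", "Enumeration"
    value: str  # normalized value the application works with
    text: str   # the words in the utterance that produced the entity


@dataclass
class ParsedUtterance:
    text: str                                   # the raw user message
    intent: str                                 # the action the user wants
    entities: List[Entity] = field(default_factory=list)

    def values_of(self, entity_type: str) -> List[str]:
        """Return the normalized values of all entities of the given type."""
        return [e.value for e in self.entities if e.type == entity_type]


# Hand-labelled example: what we would like a language service to return.
parsed = ParsedUtterance(
    text="Asian food near me please",
    intent="FindFood",
    entities=[
        Entity(type="Cuisine", value="asian", text="Asian"),
        Entity(type="Location", value="current_location", text="near me"),
    ],
)

print(parsed.intent, parsed.values_of("Location"))
```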

A Session usually represents one conversation from beginning to end. For example, when you book a flight, the session starts with ‘I need a flight to London’ (the intent), continues through subsequent interactions (questions and answers) until you get the information about the booked flight, and ends when you finish the interaction.

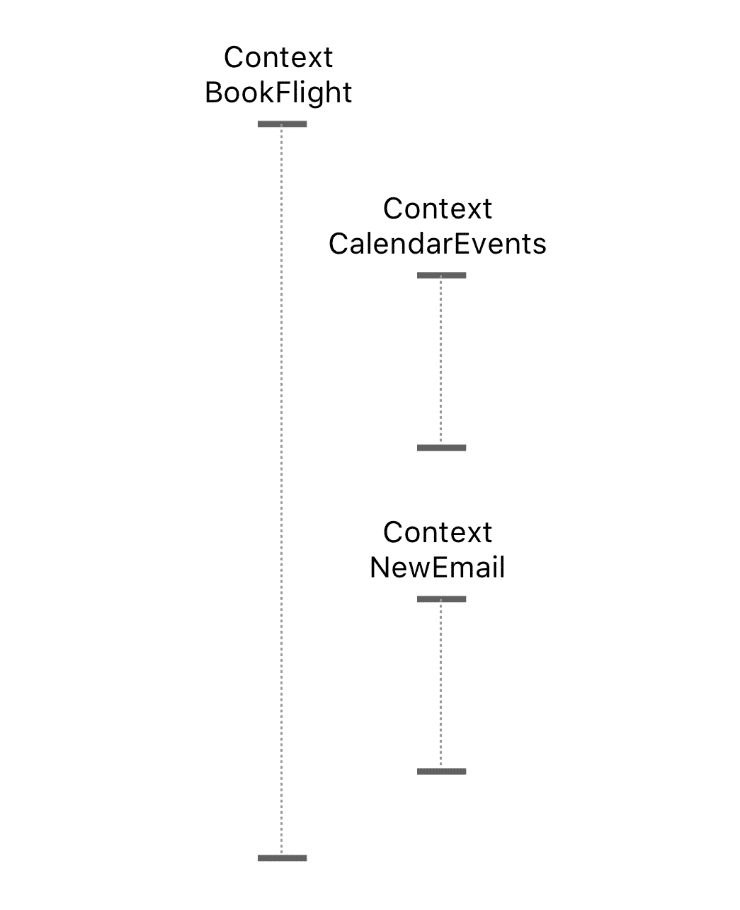

For storing the intermediate states and parameters from previous expressions during the dialogue we usually use Context. We can think about context as a shared basket that we carry through the whole session and use as short term memory. For example, during the flight booking chat, we can store the intent BookFlight in a context and subsequently add other parameters (like FlightDates, FlightDestination, NumberOfStops or MinMaxPrice) from the conversation once we get them from the user.

Unlike a session, we can have many contexts during one conversation, and they can nest into one another. Let’s say that after the user expression that represents the BookFlight intent, we start a new context, BookFlightContext, which indicates that we are currently collecting all parameters needed for the booking.

After the question about flight dates, the user decides to request info from the calendar, thus expressing a new intent CalendarEvents, and starting a new context, CalendarEventsContext, that saves the state of user interaction during the dialogue about events in a calendar. The user can even decide to reschedule several events and write a short email to involved parties with apologies and a reason for rescheduling, thus creating another nested context object, NewEmailContext.
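One simple way to picture nested contexts is a stack that lives inside the session. The sketch below is our own minimal model, with hypothetical `Session` and `Context` classes; none of the platforms necessarily implements contexts this way.

```python
from typing import Any, Dict, List, Optional


class Context:
    """Short-term memory for one topic of the conversation."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.params: Dict[str, Any] = {}


class Session:
    """One conversation from beginning to end, holding a stack of contexts."""

    def __init__(self) -> None:
        self._stack: List[Context] = []

    def push(self, name: str) -> Context:
        """Start a new nested context and make it the current one."""
        ctx = Context(name)
        self._stack.append(ctx)
        return ctx

    def pop(self) -> Context:
        """Finish the current context and return to the enclosing one."""
        return self._stack.pop()

    @property
    def current(self) -> Optional[Context]:
        return self._stack[-1] if self._stack else None


session = Session()
booking = session.push("BookFlightContext")
booking.params["FlightDestination"] = "London"

# The user detours into the calendar and then into an email.
session.push("CalendarEventsContext")
session.push("NewEmailContext")

# Once the email and the calendar detour are finished, we are back at the booking.
session.pop()
session.pop()
print(session.current.name, session.current.params)
```

The parameters collected for the booking survive the detour, which is exactly what the shared basket is for.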

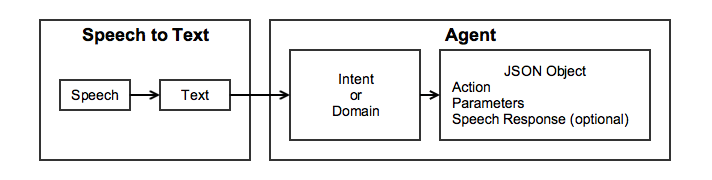

So some of the technical tasks of the chat bot app (or conversational agent) are:

- Understand the language in plain text (or voice translated into text) and extract the Intent with Parameters.

- Process the Intent with Parameters and execute the next action to continue a dialogue with the user. (Result is a response or a subsequent question to continue the conversation by getting more data from the user and filling needed parameters in order to fulfill the action).

- Maintain the Context and its state with all parameters received during the single Session in order to get the required result to the user.
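The second and third tasks can be sketched as a small function that decides what to do next: ask for a missing parameter or execute the action. The table of required parameters and the prompt texts are hypothetical; the parameter names are borrowed from the flight booking example above.

```python
from typing import Dict, List, Tuple

# Parameters each intent needs before its action can run (illustrative).
REQUIRED_PARAMS: Dict[str, List[str]] = {
    "BookFlight": ["FlightDestination", "FlightDates"],
}

# Question to ask when a parameter is still missing (illustrative).
PROMPTS: Dict[str, str] = {
    "FlightDestination": "Where would you like to fly?",
    "FlightDates": "When would you like to travel?",
}


def next_step(intent: str, params: Dict[str, str]) -> Tuple[str, str]:
    """Return ("ask", question) while parameters are missing,
    or ("execute", intent) once everything has been collected."""
    for name in REQUIRED_PARAMS.get(intent, []):
        if name not in params:
            return ("ask", PROMPTS[name])
    return ("execute", intent)


# The context carries the parameters collected so far in the session.
context = {"FlightDestination": "London"}
print(next_step("BookFlight", context))   # asks for the travel dates

context["FlightDates"] = "2016-12-24"
print(next_step("BookFlight", context))   # ready to execute the booking
```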

Next, we will look at how the available tools can help us with all of this.

Microsoft Language Understanding Intelligent Service (LUIS)

LUIS was introduced during this year’s Microsoft Build 2016 event in San Francisco, together with Microsoft Bot Framework and Skype Developer Platform, which can be used to create Skype Bots. In this article we leave aside Bot Framework and look at language understanding features from LUIS.

LUIS provides Entities that you can define and then teach the LUIS system to recognize in free-text expressions. There are also Hierarchical Entities that are helpful for recognizing different types or sub-groups. For instance, a FlightDate entity can have a ToDate and a FromDate which can be recognized separately.

Currently, there is a limit of 10 Entities of each type per application, which will be enough for a mid-size service.

Besides Intents and Entities, there is also the concept of Actions that can be triggered by the system once the Intent and all required parameters are present.

Moving closer to automatic language understanding and to acting once an Intent and its parameters are complete, there is another feature called Action Fulfilment. It is currently available only in preview mode, but you can already play with it and plan for the future. The idea is that once we have an Intent, the system can automatically execute predefined Channel Actions like GetCurrentWeather, GetNews or your own JsonRequest to an arbitrary API.

Dialogue support, which is also available only in preview mode, can help us organize the conversation and ask the user relevant questions in order to fill in the missing parameters for the intent.

To train the model with different utterances, LUIS provides a Web interface where we can type an expression, see an output from the model, and make changes in labels or assign new intents. Additionally, LUIS stores all incoming expressions in the Logs section and provides semi-automatic learning features with Suggestion, where the system tries to predict the correct intents that are already present in the model.

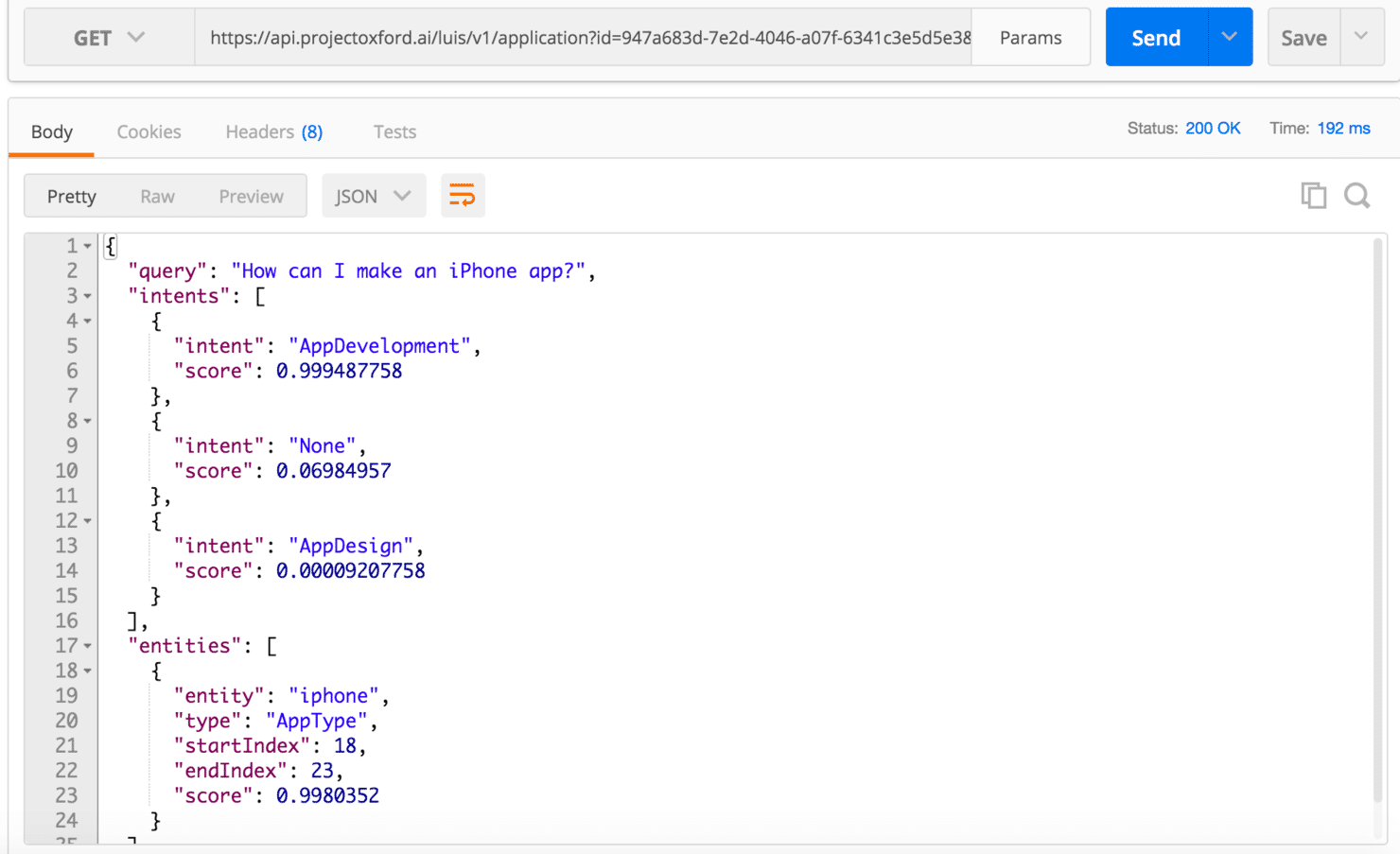

Once we have the trained model, we can use the API to send an expression as input and receive the intents, entities and actions with parameters recognized in it.
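On the application side, handling such a response usually means picking the best-scoring intent and grouping the entities. The sketch below works on a hand-written JSON sample; its shape is our assumption for illustration and should not be read as the documented LUIS response schema, so check the official reference for the real field names.

```python
import json
from typing import Dict, List

# Hand-written sample; the field names are assumptions, not the official schema.
SAMPLE_RESPONSE = """
{
  "query": "I need a flight to London",
  "intents": [
    {"intent": "BookFlight", "score": 0.93},
    {"intent": "None", "score": 0.04}
  ],
  "entities": [
    {"entity": "london", "type": "Location"}
  ]
}
"""


def top_intent(response: dict, threshold: float = 0.5) -> str:
    """Return the best-scoring intent, or "None" if the model is not confident."""
    best = max(response["intents"], key=lambda item: item["score"])
    return best["intent"] if best["score"] >= threshold else "None"


def entities_by_type(response: dict) -> Dict[str, List[str]]:
    """Group the recognized entity values by their type."""
    grouped: Dict[str, List[str]] = {}
    for item in response.get("entities", []):
        grouped.setdefault(item["type"], []).append(item["entity"])
    return grouped


response = json.loads(SAMPLE_RESPONSE)
print(top_intent(response), entities_by_type(response))
```

The confidence threshold is a design choice of ours: below it, the bot can ask the user to rephrase instead of acting on a weak guess.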

LUIS can export and import the trained model as plain JSON with all expressions and entity markup, which we can then reuse in our own code, or even use to replace LUIS completely if we decide later to build our own NLP engine.

Currently, LUIS is in beta and free to use for up to 100k requests per month and up to 5 requests per second for each account.

Next we will look at Wit.ai from Facebook.

Facebook Wit.ai Bot Engine

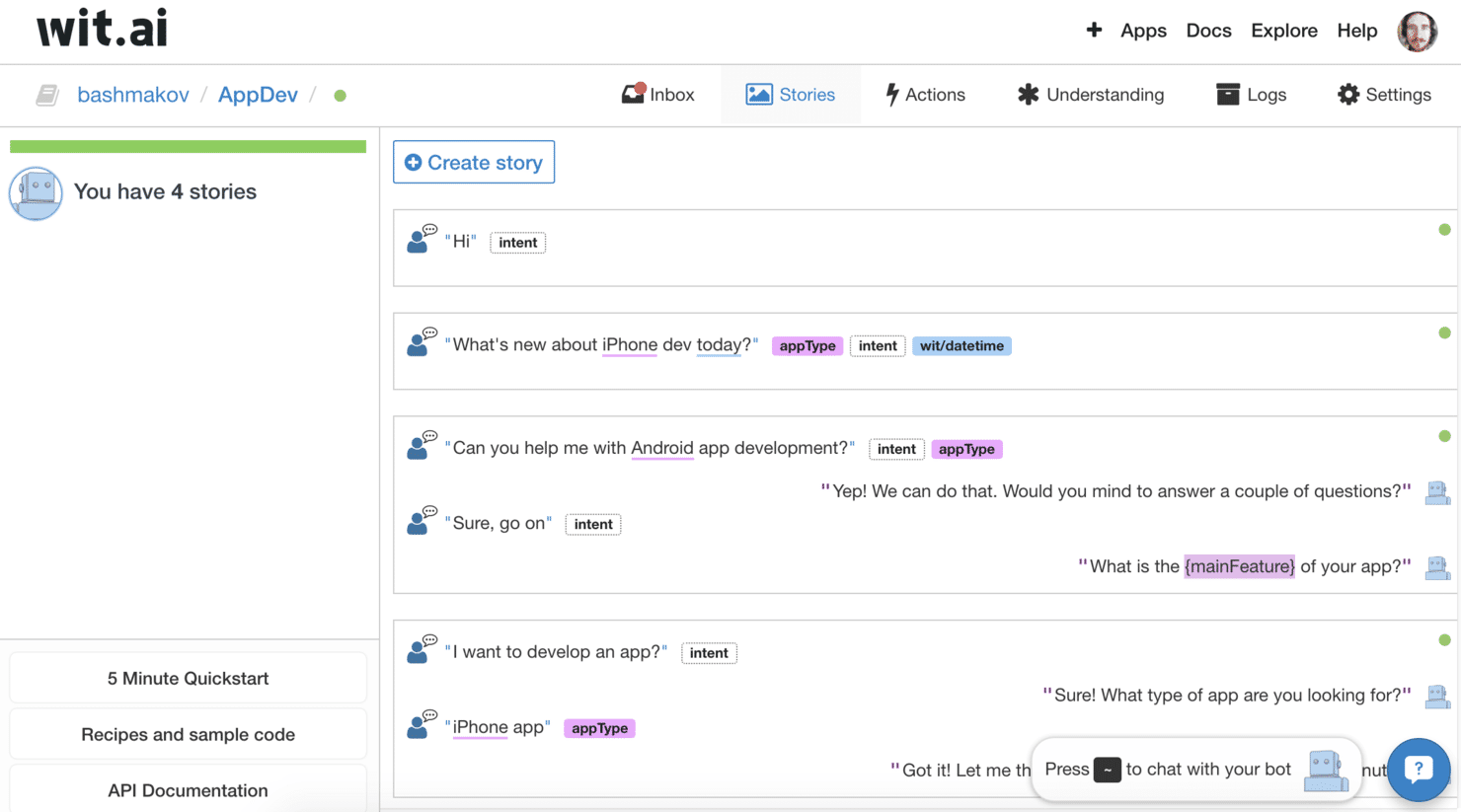

Wit.ai, an AI startup that aims to help developers with Natural Language Processing tasks through the API, was acquired by Facebook in January 2015. During the F8 conference in April, 2016, Facebook introduced a major update to their platform and rolled out their own version of Bot Engine that extends a previous intent-oriented approach to the story-oriented approach.

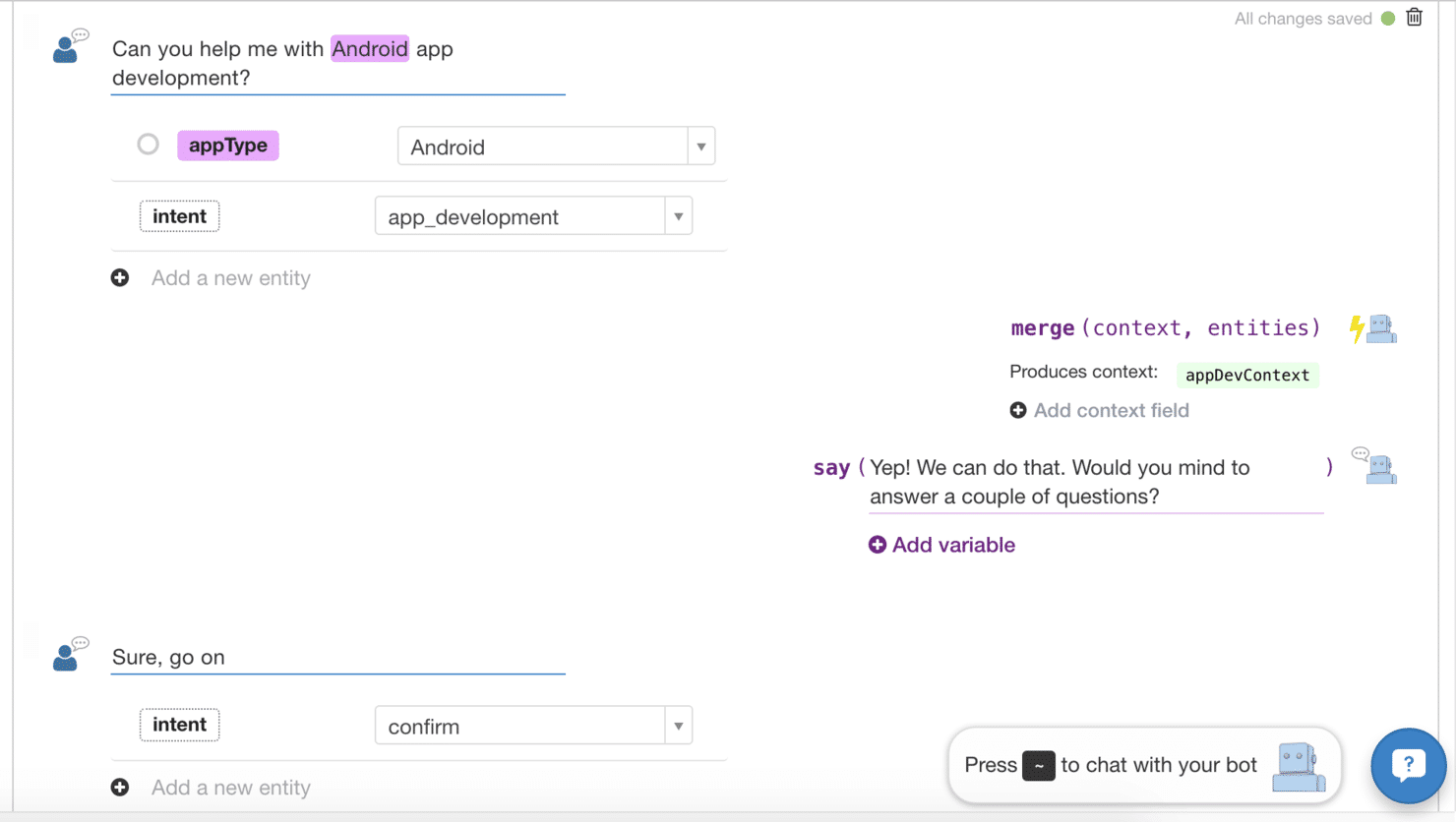

Building conversational interfaces around stories feels more natural and is easier to follow than separate intents strung together by context variables. Under the hood, during the logic implementation, you still work extensively with the context and need to do all the tasks required to keep the conversation in the correct state.

In Wit.ai we can use the concepts of Entities, Intents (actually just a custom entity type here), Context and Actions, which together form a model based on machine learning and statistics that can later be used for understanding the language.

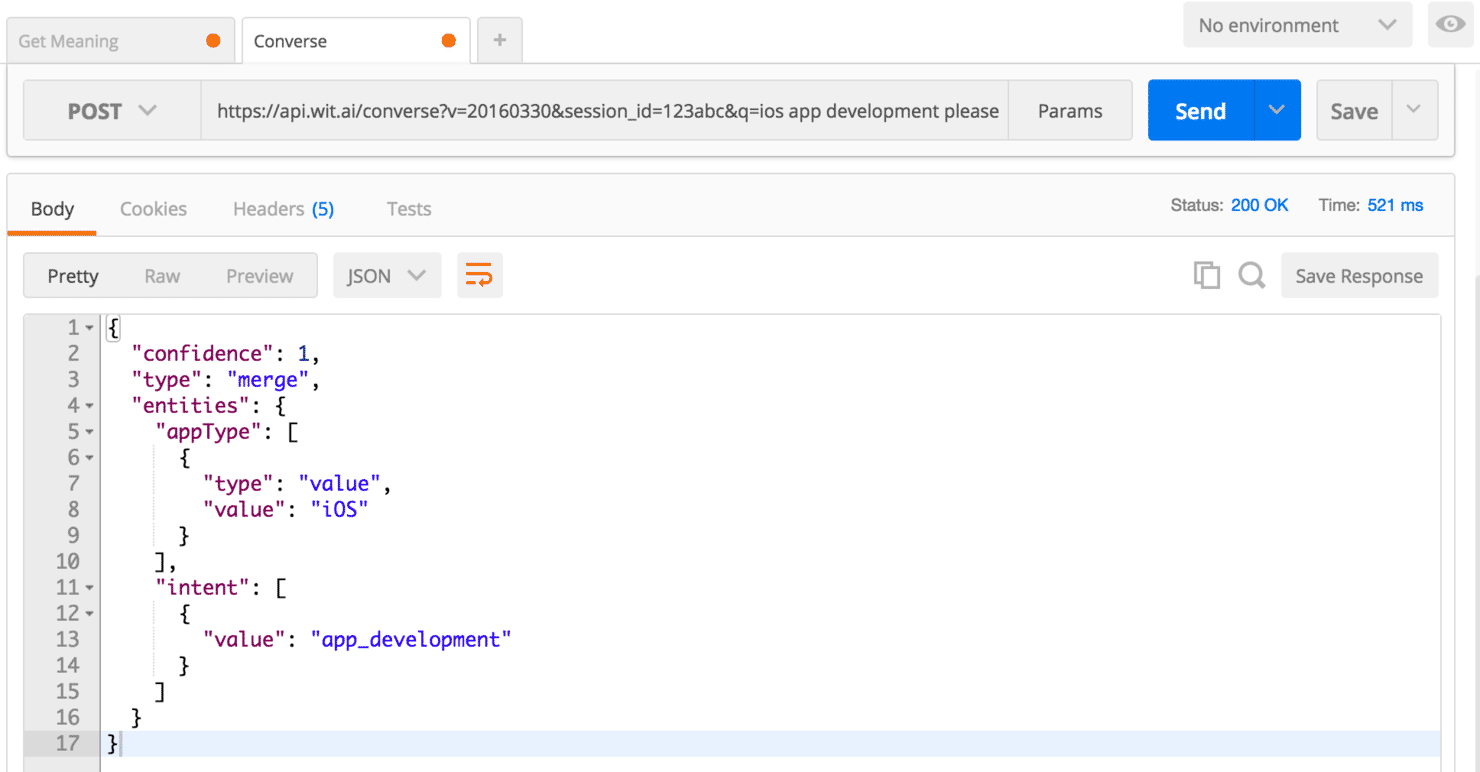

On the bot side, during the story definition, we can execute any action needed to fulfill the user’s request and prepare data and/or state in the context. Effectively, the Wit.ai Converse API resolves the user utterance and the given state into the next state or action of your system, giving you a tool to build a Finite State Machine that describes sequences of speech acts.

However, all actions are executed on our server, and Wit.ai just orchestrates the process and suggests the next call of state mutations based on the model that we’ve trained.
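The finite state machine idea can be sketched with a plain transition table. This is not the Wit.ai API: in Wit.ai the trained model predicts the next action, while here a hand-written table of hypothetical states, intents and action names stands in for it.

```python
from typing import Dict, List, Tuple

# (current state, recognized intent) -> (next state, action to run on our server)
TRANSITIONS: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("start", "FindFood"): ("awaiting_confirmation", "suggest_place"),
    ("awaiting_confirmation", "Yes"): ("done", "send_directions"),
    ("awaiting_confirmation", "No"): ("awaiting_confirmation", "suggest_place"),
}


def step(state: str, intent: str) -> Tuple[str, str]:
    """Resolve the current state and the user's intent into the next state and action.
    Unknown combinations keep the state and ask the user to rephrase."""
    return TRANSITIONS.get((state, intent), (state, "ask_to_rephrase"))


def run_dialogue(intents: List[str]) -> Tuple[str, List[str]]:
    """Feed a sequence of recognized intents through the machine."""
    state = "start"
    actions: List[str] = []
    for intent in intents:
        state, action = step(state, intent)
        actions.append(action)
    return state, actions


final_state, actions = run_dialogue(["FindFood", "No", "Yes"])
print(final_state, actions)
```

The user asks for food, declines the first suggestion and accepts the second one, so the machine ends in the `done` state after suggesting a place twice and sending directions.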

Everything, from understanding the user inputs to the training expressions and list of entities, is available through the extensive Wit.ai API.

Like other systems, Wit.ai provides a handy Inbox feature where you can access all incoming utterances from the users, and label them if they were not recognized correctly.

In one of the latest updates, Wit.ai introduced the chat UI for testing conversations so we can see steps that systems recognize, which helps during both the creation and the debugging of the model.

Wit.ai supports 50 different languages including English, Chinese, Japanese, Polish, Ukrainian and Russian.

Projects can be Open or Private, without any apparent limitations. Open projects can be forked, and you can create your own version of the model on top of existing community projects.

The Wit.ai API is completely free with no limitations on request rates, so it is a good choice for your next bot experiments.

UPDATE 2016-12-22: Wit.ai is continuously pushing new features and capabilities. Since the release of the first version of this article they’ve made a better builder for the Stories and added support for Quick Replies, Branches (if/else) and Jumps in Stories, which is great for describing complex flows.

Api.ai – conversational UX Platform

Api.ai was created by a team who had built a personal assistant app for major mobile platforms with speech and text-enabled conversations.

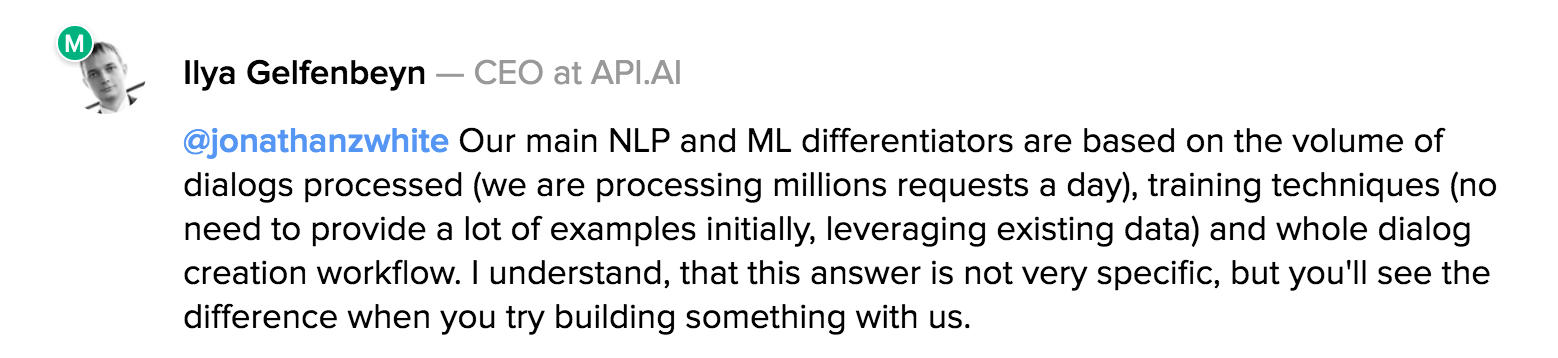

To give you a better understanding of how Api.ai is different from other platforms, here is the answer their CEO gave on Product Hunt:

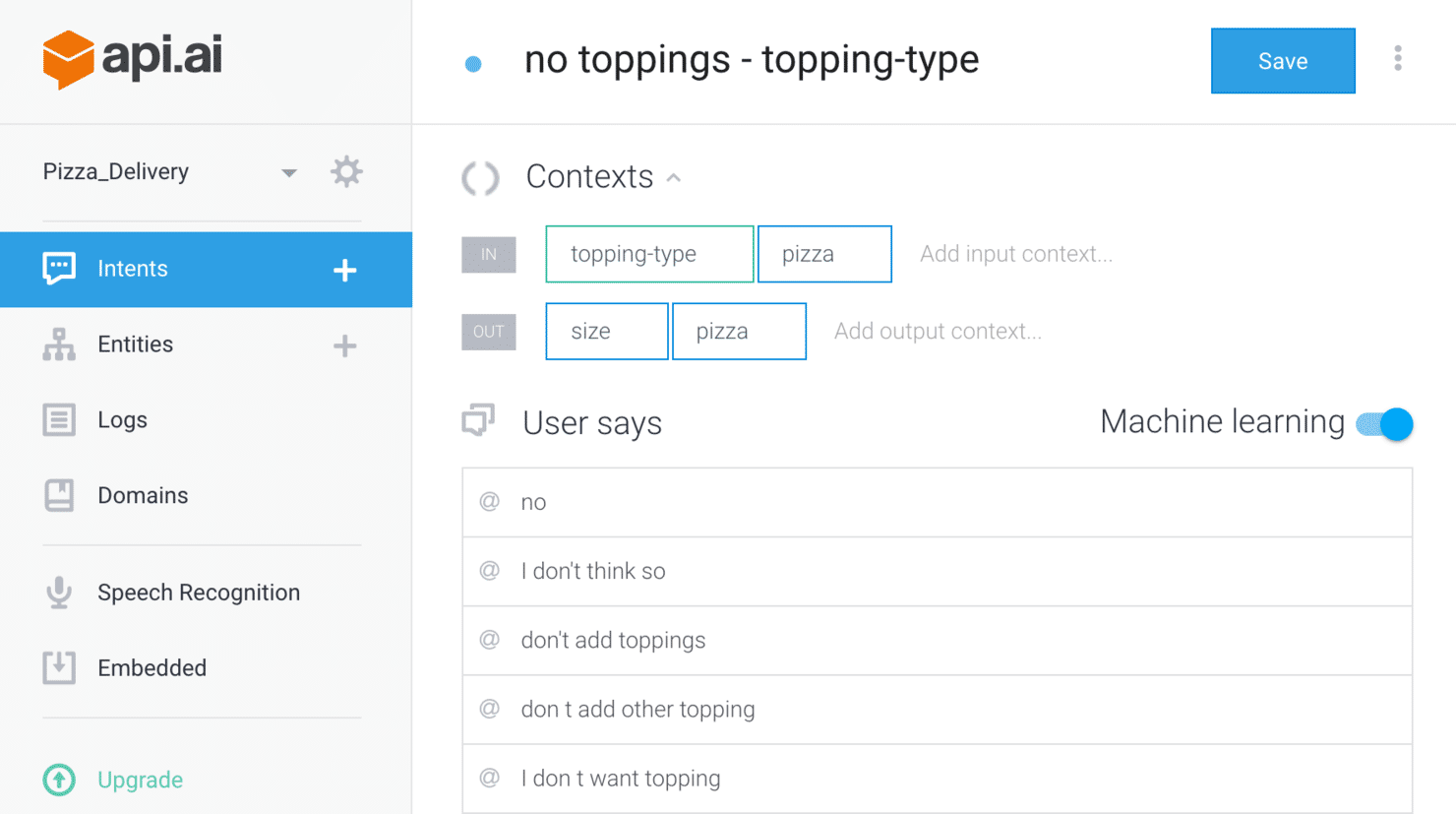

Indeed, the service provides all the features you might expect from a decent conversational platform including support of Intents, Entities, Actions with parameters, Contexts, Speech to Text and Text to Speech capabilities, along with machine learning that works silently and trains your model.

Everything starts from Agents that represent the model and rules for your application.

The interesting thing is that Api.ai has built-in domains of knowledge (Intents with Entities and even suggested Replies) on topics like small talk, weather, apps and even wisdom. This means that your new Agent on the system can recognize these Intents without any additional training, and can even provide you with the response text that your bot can say next. There are up to 35 different domains with full English support and partial support for six other languages.

When you create an Intent, you directly define which Context the Intent should expect and produce as a result. You can also define several speech responses which an agent will return to your app through the API, so you don’t even need to store such variations in your app.
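The relationship between Intents and Contexts can be sketched as follows. The `IntentDef` structure, the intent names and the `food-suggested` context are hypothetical and only illustrate the idea of expected (input) and produced (output) contexts; they are not the Api.ai data model.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class IntentDef:
    name: str
    input_contexts: Set[str] = field(default_factory=set)   # contexts the intent expects
    output_contexts: Set[str] = field(default_factory=set)  # contexts it produces
    responses: List[str] = field(default_factory=list)      # speech responses stored with the intent


INTENTS = [
    IntentDef("FindFood", set(), {"food-suggested"},
              ["How about a Thai place nearby?"]),
    IntentDef("ConfirmPlace", {"food-suggested"}, set(),
              ["Great, sending you directions."]),
]


def eligible(active_contexts: Set[str]) -> List[str]:
    """Intents whose expected contexts are all currently active."""
    return [i.name for i in INTENTS if i.input_contexts <= active_contexts]


print(eligible(set()))                # only FindFood can match at the start
print(eligible({"food-suggested"}))   # after a suggestion, ConfirmPlace is possible too
```

A plain "Yes" can only be interpreted as ConfirmPlace once a suggestion has been made, which is why the intent declares the context it expects.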

Api.ai provides integrations with different bot platforms including Slack, Facebook Messenger, Kik, Alexa and Cortana.

For example, you can build the conversational flow completely on the platform and then deploy it automatically on Heroku, or use a pre-built Docker container with the app.

There is also an embedded integration mode available so you can have an agent that works without connection to the internet and is independent from any API. Just think about use cases like embedded hiking assistants or in-car assistants.

Api.ai looks like a decent solution that you can use for building sophisticated conversational interfaces. Like LUIS (in beta) from Microsoft or Wit.ai from Facebook, it is free, with limits on bandwidth and on the speech recognition feature; a Preferred plan without these limits is also available on request.

UPDATE from Dec 1, 2016: Google has bought Api.ai since the first version of this article. Good for the founders, but this means the community has lost a powerful independent NLP service, although a couple of other startups are emerging from stealth mode.

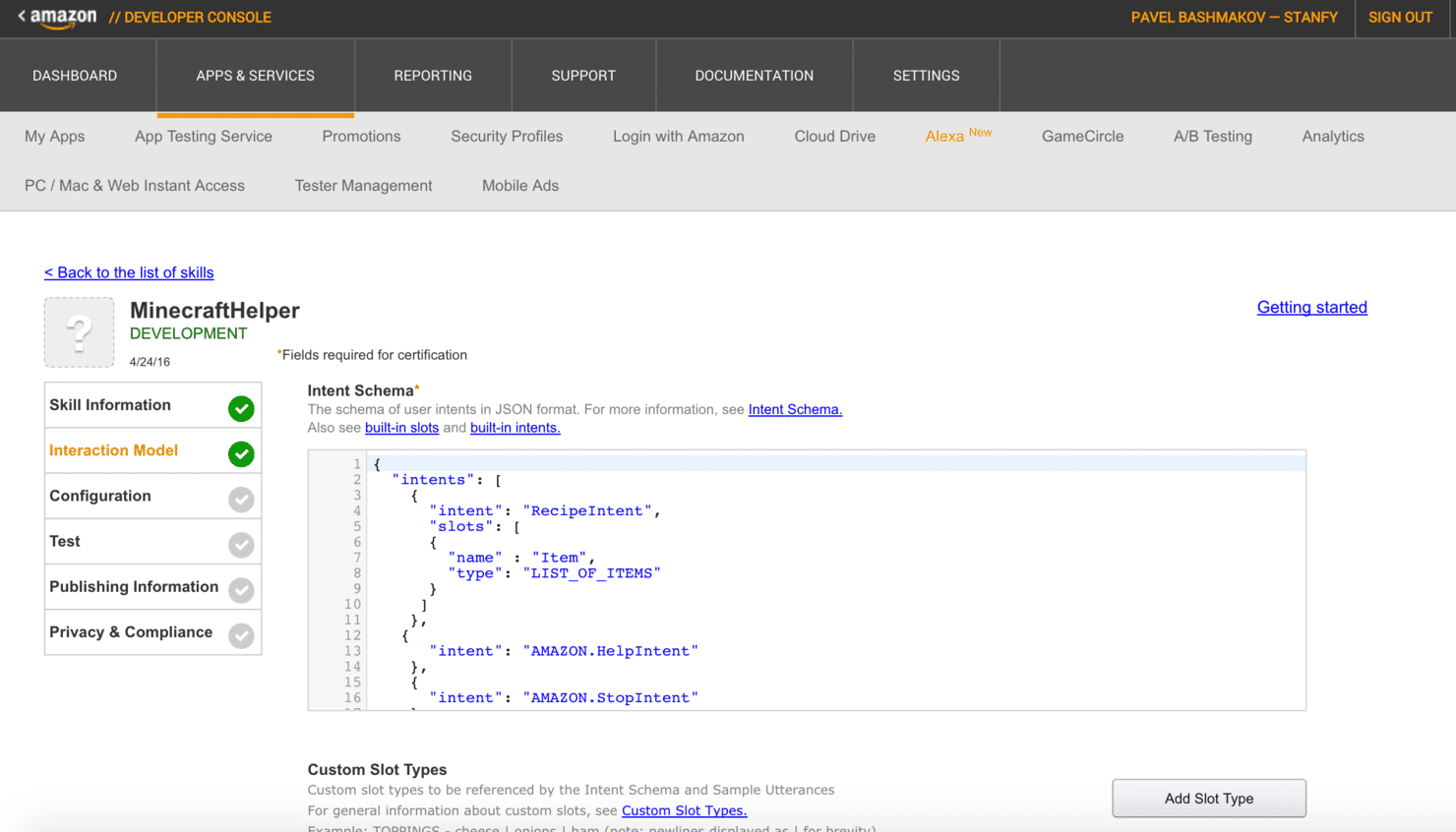

Amazon Alexa Skills Kit

The Alexa Skills Kit only works with Amazon Alexa. At first glance, this looks like the simplest language processing algorithm among all the systems reviewed here, but it’s deployed, tested and exposed to more than 3 million Amazon Alexa users who are already using conversational interfaces on a daily basis.

With Amazon Alexa Skills Kit you can define Intents and Entities for your task. The Alexa system recognizes an intent correctly despite variations in wording only when you provide every possible example expression, exactly matching how users might say it to Alexa. It feels like they are still working on their own version of machine learning in order to simplify the work needed for model training.

The great thing is that a whole new skill for Alexa can easily be built with AWS Lambda functions, which integrate seamlessly with the Alexa Skills Kit.
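A Lambda-backed skill boils down to a handler function that receives the recognized intent as JSON and returns the text to speak. Below is a minimal sketch in Python. The `FindFoodIntent` intent and the `Cuisine` slot are hypothetical names of ours, and the request and response envelopes follow the Alexa Skills Kit JSON format as we understand it, so verify the field names against the official documentation before relying on them.

```python
def lambda_handler(event, context):
    """Entry point invoked by AWS Lambda for each Alexa request."""
    request = event["request"]

    if (request["type"] == "IntentRequest"
            and request["intent"]["name"] == "FindFoodIntent"):  # hypothetical intent
        slots = request["intent"].get("slots", {})
        # "Cuisine" is a hypothetical slot; fall back to "any" if it is missing.
        cuisine = slots.get("Cuisine", {}).get("value", "any")
        text = f"Looking for {cuisine} food near you."
    else:
        text = "Sorry, I did not understand that."

    # Response envelope with plain-text speech (format assumed, see lead-in).
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


# Local smoke test with a hand-written event; no AWS account is needed.
sample_event = {
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "FindFoodIntent",
            "slots": {"Cuisine": {"name": "Cuisine", "value": "asian"}},
        },
    }
}
reply = lambda_handler(sample_event, None)
print(reply["response"]["outputSpeech"]["text"])
```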

Anyway, the Amazon Alexa Skills Kit is an outstanding system whose development you should keep following, because Amazon is currently a leading household platform for conversations and custom bot integrations, which it is aggressively pushing forward with new device offerings and features.

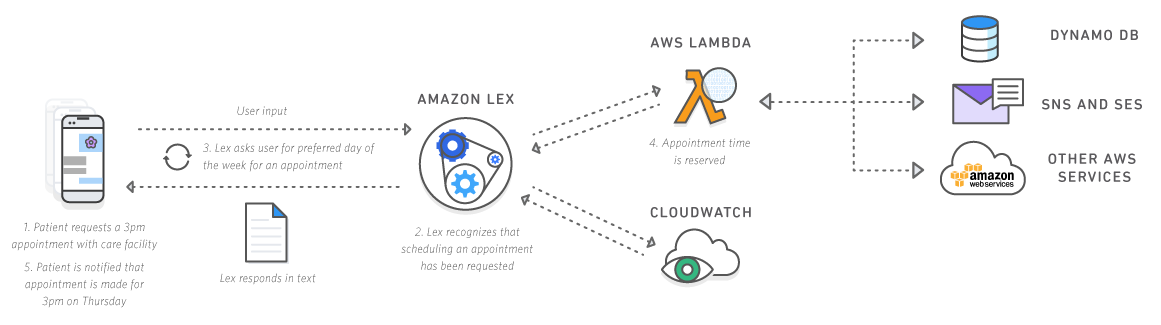

UPDATE from Dec 1, 2016: Yesterday, Amazon revealed Amazon Lex, a conversational interface API with NLP features and tight integration with Amazon services such as Lambda, DynamoDB, SNS/SES and others. We’ll look into Amazon Lex internals once it becomes available.

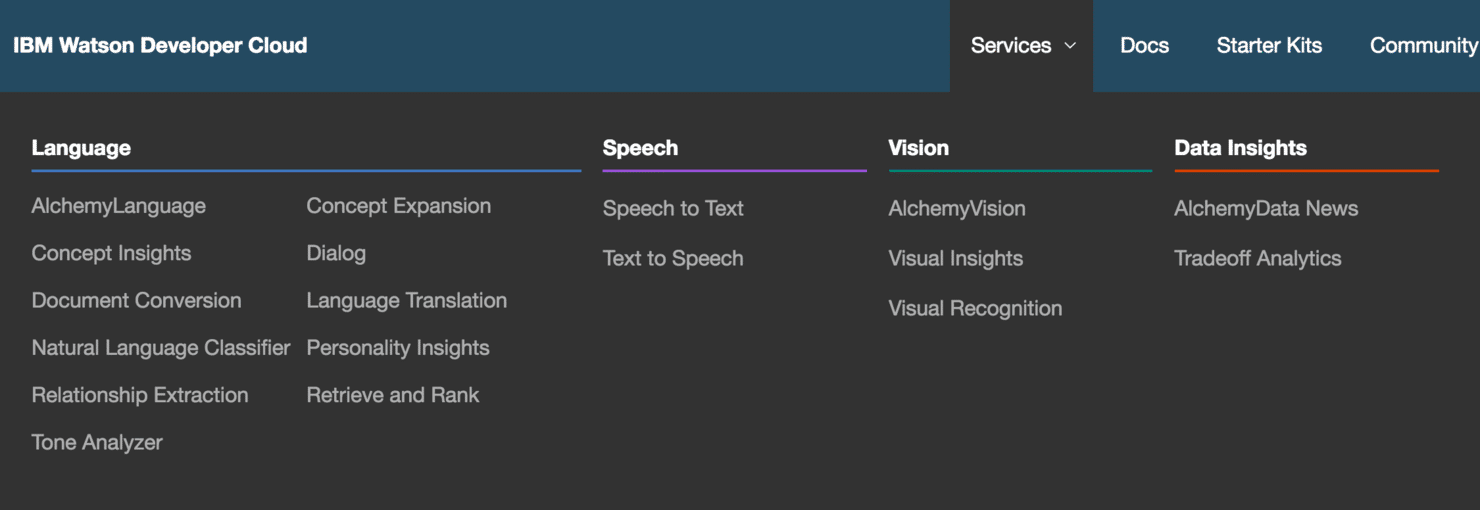

IBM Watson Developer Cloud Services

You probably remember the famous game in which IBM Watson won against two humans on the TV quiz show “Jeopardy” in 2011. The good news is that IBM has moved the technology behind Watson into the cloud and released a set of APIs that you can use in your own conversational applications.

The API set includes language understanding offerings from a natural language classifier to concept insights and dialogue processing. There are a lot of building blocks that you can use in your application, but you probably will spend a fair amount of time integrating them into one solution.

We’ve used IBM Alchemy Language for sentiment analysis and keyword extraction in our experiments, and it worked well. We think that IBM’s solution is the ideal choice for enterprises that want to be 100% sure of their API provider.

For a recent IBM Watson demonstration you can watch a fireside chat with Dr. John Kelly, who leads the Watson team at IBM, at TechCrunch Disrupt 2015 in San Francisco.

IBM Watson is, however, a costly solution and you can expect to pay up to $0.02 per API call in Dialogue API, so it may be too expensive to experiment with in building bots for Facebook Messenger when you still don’t have a working business model.
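To get a feeling for the numbers, here is a back-of-the-envelope estimate. It takes the upper bound of $0.02 per call from the text and assumes, as a simplification of ours, one API call per user message; the user and message counts are made-up inputs.

```python
def monthly_cost_usd(users: int,
                     messages_per_user_per_day: int,
                     price_cents_per_call: int = 2,  # upper bound of $0.02 per call
                     days: int = 30) -> float:
    """Estimate the monthly API bill, assuming one API call per message."""
    calls = users * messages_per_user_per_day * days
    # Work in whole cents to avoid floating-point rounding surprises.
    return calls * price_cents_per_call / 100


# 1,000 active users sending 10 messages a day each:
# 1,000 * 10 * 30 = 300,000 calls, or $6,000 at the upper-bound price.
print(monthly_cost_usd(1000, 10))
```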

The full list of available APIs from IBM Watson Developer Cloud is available here.

Conclusion

In this article, we have seen that there are various systems available for building conversational interfaces.

Our personal preference goes to Wit.ai from Facebook and LUIS from Microsoft, as they provide all the necessary elements for building conversations and they are free (at least for now), so you don’t have to worry about the price.

Anyway, we would recommend you store all data needed for your model in a structured way in your own code repository. This means that later you can retrain the model from scratch, or even change the language understanding provider if needed. You just don’t want to be in a situation when a company shuts down their service and you are completely unprepared. Do you remember Parse?
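One way to follow this advice is to keep labelled utterances in a small provider-neutral structure and convert it to each provider's import format when needed. The layout below is a hypothetical format of our own, not the export format of LUIS, Wit.ai or any other service.

```python
import json

# Labelled training examples kept in our own repository (format is illustrative).
# Entity spans are character offsets into "text": start inclusive, end exclusive.
TRAINING_DATA = [
    {
        "text": "Asian food near me please",
        "intent": "FindFood",
        "entities": [
            {"type": "Cuisine", "start": 0, "end": 5},
            {"type": "Location", "start": 11, "end": 18},
        ],
    },
]


def entity_text(example: dict, entity: dict) -> str:
    """Return the part of the utterance that an entity label covers."""
    return example["text"][entity["start"]:entity["end"]]


def validate(examples: list) -> bool:
    """Check that every entity span is non-empty and lies inside its utterance."""
    return all(
        0 <= e["start"] < e["end"] <= len(ex["text"])
        for ex in examples
        for e in ex["entities"]
    )


# The data is plain JSON, so it can be versioned alongside the bot's code.
serialized = json.dumps(TRAINING_DATA, indent=2)
restored = json.loads(serialized)
print(validate(restored), entity_text(restored[0], restored[0]["entities"][1]))
```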

For end-to-end solutions that require less code, we think Api.ai is the way to go. This is also a good option if you need embedded capabilities that do not depend on an internet connection. We also often use Chatfuel, as it is an easy-to-use builder of conversational flows with powerful JSON API integrations.

Alexa Skills Kit is proprietary to Amazon Echo devices, so you can’t use it for language processing with arbitrary bots on Slack or Facebook Messenger, but it is ideal for smart home bots that augment your kitchen or living room environment and are built specifically for Alexa. Luckily, Amazon Lex will soon be available to the public, so it could be a great alternative, especially if your infrastructure is already tied into the AWS ecosystem.

IBM Watson will work smoothly in an enterprise environment when you need to feed large amounts of data, you have a decent budget and you want to have a reliable and proven service provider behind you.

Generally speaking, we expect to see many more platforms and API services for language understanding tasks in 2016, because the field is just beginning to heat up, with the major platforms newly announcing their bot platforms and frameworks.

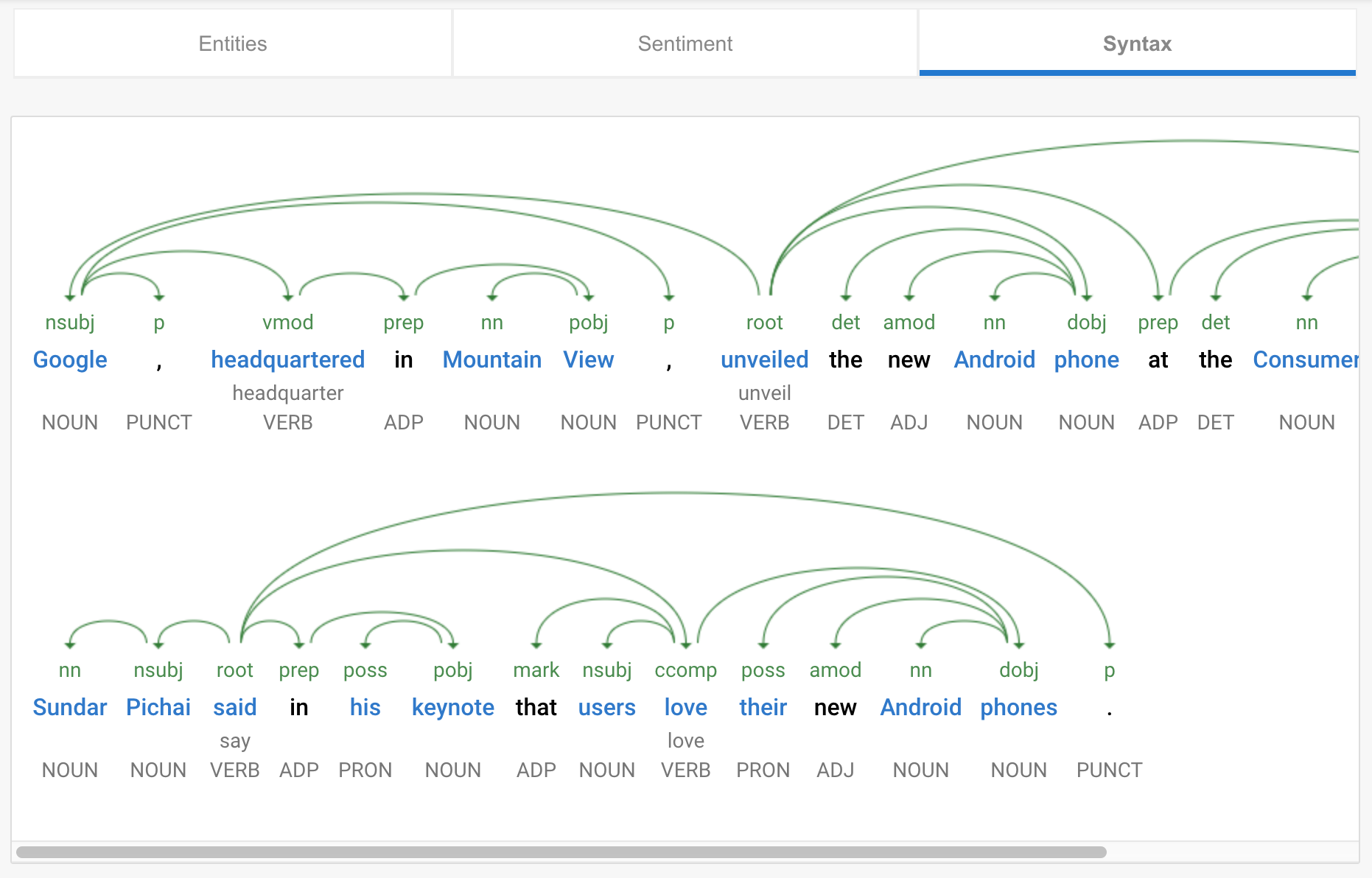

Since the first version of this article in May, 2016, Google have launched their own NLP service, Cloud Natural Language API, which is packed with text analytics, content classifications & relationship graphs, and deep learning models that you can use for your chatbot needs. We’ll review Google’s offering in a separate article.

Stay tuned and happy chatbot building.

Building our own bot

This article was written as part of our own challenge to build a smart bot with AI capabilities that can help people understand how to build a mobile app, give useful advice and provide an estimation of development and design costs.